[Click here for a PDF of this post with nicer formatting]. Note that this PDF file is formatted in a wide-for-screen layout that is probably not good for printing.

These notes build on and replace those formerly posted in Energy and momentum for assumed Fourier transform solutions to the homogeneous Maxwell equation.

Motivation and notation.

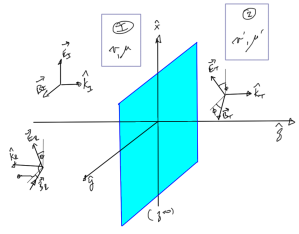

In Electrodynamic field energy for vacuum (reworked) [1], building on Energy and momentum for Complex electric and magnetic field phasors [2], the energy and momentum densities were derived for an assumed Fourier series solution to the homogeneous Maxwell equation. Here we move to the continuous case, examining Fourier transform solutions and the associated energy and momentum density.

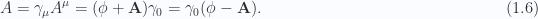

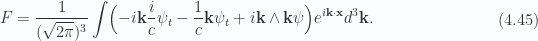

A complex (phasor) representation is implied, so the real parts of the fields are to be taken when all is said and done. For the energy momentum tensor, the Geometric Algebra form, modified for complex fields, is used

$$T(a) = -\frac{\epsilon_0}{4} \left( F^{*} a F + F a F^{*} \right).$$

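The assumed four vector potential will be written

$$A(\mathbf{x}, t) = A^\mu(\mathbf{x}, t) \gamma_\mu = \frac{1}{(\sqrt{2\pi})^3} \int A(\mathbf{k}, t) e^{i \mathbf{k} \cdot \mathbf{x}} d^3 \mathbf{k},$$

subject to the requirement that $A$ is a solution of Maxwell’s equation

$$\nabla (\nabla \wedge A) = 0.$$

To avoid LaTeX hell, no special notation will be used for the Fourier coefficients,

$$A(\mathbf{k}, t) = \frac{1}{(\sqrt{2\pi})^3} \int A(\mathbf{x}, t) e^{-i \mathbf{k} \cdot \mathbf{x}} d^3 \mathbf{x}.$$

When convenient and unambiguous, this $(\mathbf{k}, t)$ dependence will be implied.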

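Having picked a time and space representation for the field, it will be natural to express both the four potential and the gradient as scalar plus spatial vector, instead of using the Dirac basis. For the gradient this is

$$\nabla = \gamma^\mu \partial_\mu = \gamma_0 \left( \partial_0 + \boldsymbol{\nabla} \right), \qquad \partial_0 = \frac{1}{c} \frac{\partial}{\partial t}, \quad \boldsymbol{\nabla} = \sigma_k \partial_k, \quad \sigma_k = \gamma_k \gamma_0,$$

and for the four potential (or the Fourier transform functions), this is

$$A = \gamma_0 \left( \phi - \mathbf{A} \right), \qquad \mathbf{A} = A^k \sigma_k.$$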

Setup

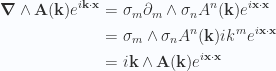

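The field bivector $F = \nabla \wedge A$ is required for the energy momentum tensor. This is

$$\nabla \wedge A = \left\langle \gamma_0 \left( \partial_0 + \boldsymbol{\nabla} \right) \gamma_0 \left( \phi - \mathbf{A} \right) \right\rangle_2 = \left\langle \left( \partial_0 - \boldsymbol{\nabla} \right) \left( \phi - \mathbf{A} \right) \right\rangle_2 = -\boldsymbol{\nabla} \phi - \partial_0 \mathbf{A} + \boldsymbol{\nabla} \wedge \mathbf{A}.$$

This last term is a spatial curl, $\boldsymbol{\nabla} \wedge \mathbf{A} = I \boldsymbol{\nabla} \times \mathbf{A}$, and the field is then

$$F = -\boldsymbol{\nabla} \phi - \partial_0 \mathbf{A} + I \boldsymbol{\nabla} \times \mathbf{A}.$$

Applied to the Fourier representation this is

$$F = \frac{1}{(\sqrt{2\pi})^3} \int \left( -i \mathbf{k} \phi(\mathbf{k}, t) - \partial_0 \mathbf{A}(\mathbf{k}, t) + I \, i \mathbf{k} \times \mathbf{A}(\mathbf{k}, t) \right) e^{i \mathbf{k} \cdot \mathbf{x}} d^3 \mathbf{k}.$$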

It is only the real parts of this that we are actually interested in, unless physical meaning can be assigned to the complete complex vector field.
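As a concrete check on the Fourier representation of the field, here is a minimal Python sketch for a single wave number $\mathbf{k}$. It assumes units with $c = 1$ and reads $F = \mathbf{E} + I \mathbf{B}$ off the integrand, so that $\mathbf{E} = -i \mathbf{k} \phi - \partial_0 \mathbf{A}$ and $\mathbf{B} = i \mathbf{k} \times \mathbf{A}$. The helper names `cross` and `mode_field` and the sample numbers are hypothetical and serve only as illustration.

```python
# Minimal sketch: one Fourier mode of F = E + I B, assuming units with c = 1.
# The helper names (cross, mode_field) and the sample numbers are illustrative.

def cross(a, b):
    """Cross product of two 3-vectors with (possibly complex) components."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def mode_field(k, phi, A, dA):
    """Return the (E, B) coefficients of a single Fourier mode of F.

    k   -- real wave number vector
    phi -- scalar potential coefficient phi(k, t)
    A   -- vector potential coefficient A(k, t)
    dA  -- its time derivative d0 A(k, t)
    The spatial gradient acting on exp(i k.x) is replaced by i k.
    """
    ik = tuple(1j * kc for kc in k)
    E = tuple(-ikc * phi - dAc for ikc, dAc in zip(ik, dA))  # -grad phi - d0 A
    B = cross(ik, A)                                          # curl A
    return E, B

# Example: phi = 0, A along x, propagating along z with A(k, t) ~ exp(-i |k| x0).
k = (0.0, 0.0, 2.0)
A = (1.0, 0.0, 0.0)
dA = tuple(-2j * a for a in A)
E, B = mode_field(k, 0.0, A, dA)
print(E, B)
```

With $\phi = 0$ and $\mathbf{A}$ along $\hat{\mathbf{x}}$, the sketch gives $\mathbf{E}$ along $\hat{\mathbf{x}}$ and $\mathbf{B}$ along $\hat{\mathbf{y}}$, as expected for a wave travelling along $\hat{\mathbf{z}}$.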

Constraints supplied by Maxwell’s equation.

A Fourier transform solution of Maxwell’s vacuum equation $\nabla F = 0$ has been assumed. Having expressed the Faraday bivector in terms of spatial vector quantities, it is more convenient to substitute back into this equation after pre-multiplying it by $\gamma_0$, namely

$$\gamma_0 \nabla F = \left( \partial_0 + \boldsymbol{\nabla} \right) F = 0.$$

Applied to the spatially decomposed field as specified in (2.7), this is

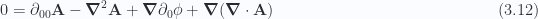

All grades of this equation must simultaneously equal zero, and the bivector grades have canceled (assuming commuting space and time partials), leaving two equations of constraint for the system

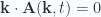

It is immediately evident that a gauge transformation could help simplify things. In [3] the gauge choice $\boldsymbol{\nabla} \cdot \mathbf{A} = 0$ is used. From (3.11) this implies that $\boldsymbol{\nabla}^2 \phi = 0$. Bohm argues that for this current and charge free case this implies $\phi = 0$, but he also has a periodicity constraint. Without a periodicity constraint it is easy to manufacture non-zero counterexamples. One is a linear function in the space and time coordinates

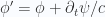

This is a valid scalar potential provided that the corresponding wave equation for the vector potential also has a solution. We can, however, force $\phi = 0$ by making the transformation $A \rightarrow A + \nabla \psi$, which in non-covariant notation is

$$\phi \rightarrow \phi + \partial_0 \psi, \qquad \mathbf{A} \rightarrow \mathbf{A} - \boldsymbol{\nabla} \psi.$$
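Here is a quick single-mode check of this gauge transformation. The sketch assumes $c = 1$ and that $\phi$, $\mathbf{A}$ and $\psi$ all carry the same factor $e^{i(\mathbf{k}\cdot\mathbf{x} - \omega x^0)}$, so that $\partial_0 \to -i\omega$ and $\boldsymbol{\nabla} \to i\mathbf{k}$. Picking $\psi = \phi/(i\omega)$ makes $\partial_0\psi = -\phi$. That zeroes the transformed scalar potential and leaves $\mathbf{E}$ and $\mathbf{B}$ unchanged. The mode dependence and all numbers are illustrative assumptions.

```python
# Sketch, assuming c = 1 and that phi, A and the gauge function psi all share the
# single-mode dependence exp(i (k.x - w x0)), so d0 -> -i w and grad -> i k.
# All numbers below are arbitrary illustrative values.

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def fields(k, w, phi, A):
    """E = -grad phi - d0 A and B = curl A for one mode."""
    ik = tuple(1j * kc for kc in k)
    E = tuple(-ikc * phi + 1j * w * Ac for ikc, Ac in zip(ik, A))
    return E, cross(ik, A)

k, w = (1.0, -2.0, 0.5), 3.0
phi = 0.7 - 0.2j
A = (0.1 + 1j, -0.4, 2j)

# Choose psi so that d0 psi = -phi, i.e. -i w psi = -phi.
psi = phi / (1j * w)
phi_g = phi + (-1j * w) * psi                              # phi -> phi + d0 psi
A_g = tuple(Ac - 1j * kc * psi for kc, Ac in zip(k, A))    # A -> A - grad psi

E, B = fields(k, w, phi, A)
E_g, B_g = fields(k, w, phi_g, A_g)
print(abs(phi_g), max(abs(x - y) for x, y in zip(E + B, E_g + B_g)))
```

Both printed numbers should be at round-off level: the scalar potential is gone, and the field is gauge invariant.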

If the transformed scalar potential $\phi' = \phi + \partial_0 \psi$ can be forced to zero, then the associated Maxwell equations are simplified. In particular, antidifferentiation of $\partial_0 \psi = -\phi$ yields

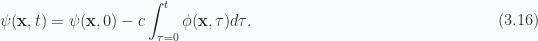


Dropping primes, the transformed Maxwell equations now take the form

There are two classes of solutions that stand out for these equations. If the vector potential is constant in time, $\partial_0 \mathbf{A} = 0$, Maxwell’s equations are reduced to the single equation

Observe that a gradient can be factored out of this equation

The solutions are then those $\mathbf{A}$ that satisfy both

In particular, any time-independent potential $\mathbf{A}$ with constant curl provides a solution to Maxwell’s equations. There may be other, more general solutions to (3.19) too. Returning to (3.17), a second way to satisfy these equations stands out. Instead of requiring $\mathbf{A}$ to have constant curl, requiring its divergence to be constant in time eliminates (3.17). The simplest resulting equations are those for which the divergence is a constant in both time and space (such as zero). The solution set is then spanned by the vectors $\mathbf{A}$ for which

Any $\mathbf{A}$ that both has constant divergence and satisfies the wave equation will then, via (2.7), produce a solution to Maxwell’s equation.

Maxwell equation constraints applied to the assumed Fourier solutions.

Let’s consider Maxwell’s equations in all three forms, (3.11), (3.20), and (3.22) and apply these constraints to the assumed Fourier solution.

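In all cases the starting point is a pair of Fourier transform relationships, where the Fourier transforms are the functions to be determined

$$\phi(\mathbf{x}, t) = \frac{1}{(\sqrt{2\pi})^3} \int \phi(\mathbf{k}, t) e^{i \mathbf{k} \cdot \mathbf{x}} d^3 \mathbf{k}, \qquad \mathbf{A}(\mathbf{x}, t) = \frac{1}{(\sqrt{2\pi})^3} \int \mathbf{A}(\mathbf{k}, t) e^{i \mathbf{k} \cdot \mathbf{x}} d^3 \mathbf{k}.$$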

Case I. Constant time vector potential. Scalar potential eliminated by gauge transformation.

From (4.24) we require

So the Fourier transform also cannot have any time dependence, and we have

What is the curl of this? Temporarily falling back to coordinates is easiest for this calculation

This gives

We want to equate the divergence of this to zero. Neglecting the integral and constant factor this requires

Requiring that the plane spanned by $\mathbf{k}$ and $\mathbf{A}(\mathbf{k})$ be perpendicular to $\mathbf{k}$ implies that $\mathbf{A}(\mathbf{k}) \propto \mathbf{k}$. The solution set is then completely described by functions of the form

where the scalar multiplying $\mathbf{k}$ is an arbitrary scalar valued function of $\mathbf{k}$. This is, however, an extremely uninteresting solution since the curl is uniformly zero

Since $F = \boldsymbol{\nabla} \wedge \mathbf{A}$ here, when all is said and done the $\phi = 0$, $\partial_0 \mathbf{A} = 0$ case appears to have no non-trivial (non-zero) solutions. Moving on, …
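Here is a numerical spot check of this conclusion at a single wave number. The value `s` is a hypothetical stand-in for the arbitrary scalar function at that $\mathbf{k}$. A coefficient proportional to $\mathbf{k}$ has vanishing Fourier-space curl $i\mathbf{k} \times \mathbf{A}(\mathbf{k})$, while a generic direction does not.

```python
# Sketch of the Case I conclusion for a single wave number k: if A(k) is
# proportional to k, the Fourier-space curl i k x A(k) vanishes identically.
# The value s stands in for the arbitrary scalar function at this k (hypothetical).

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

k = (0.3, -1.2, 2.0)
ik = tuple(1j * kc for kc in k)
s = 0.8 - 0.6j

A_parallel = tuple(s * kc for kc in k)     # A(k) proportional to k
curl_parallel = cross(ik, A_parallel)

A_other = (1.0, 0.0, 0.0)                  # a non-parallel comparison
curl_other = cross(ik, A_other)

print(curl_parallel, curl_other)
```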

Case II. Constant vector potential divergence. Scalar potential eliminated by gauge transformation.

Next in order of complexity is the case (3.22). Here we also have $\phi = 0$, eliminated by gauge transformation, and are looking for solutions with the constraint

$$\boldsymbol{\nabla} \cdot \mathbf{A} = \frac{1}{(\sqrt{2\pi})^3} \int i \mathbf{k} \cdot \mathbf{A}(\mathbf{k}, t) e^{i \mathbf{k} \cdot \mathbf{x}} d^3 \mathbf{k} = \text{constant}.$$

How can this constraint be enforced? The only obvious way is to require $\mathbf{k} \cdot \mathbf{A}(\mathbf{k}, t)$ to be zero for all $(\mathbf{k}, t)$. This means that our to-be-determined Fourier transform coefficients must be perpendicular to the wave number vector parameters at all times.

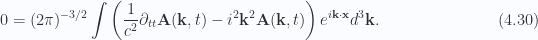

The remainder of Maxwell’s equations, (3.23), imposes an additional constraint on the Fourier transform

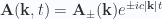

For this to equal zero for all $\mathbf{x}$, it appears that we require the Fourier transforms $\mathbf{A}(\mathbf{k}, t)$ to be harmonic in time

$$\partial_{00} \mathbf{A}(\mathbf{k}, t) = -\mathbf{k}^2 \mathbf{A}(\mathbf{k}, t).$$

This has the familiar exponential solutions

$$\mathbf{A}(\mathbf{k}, t) = \mathbf{A}(\mathbf{k}, 0) \, e^{\pm i c |\mathbf{k}| t},$$

also subject to a requirement that $\mathbf{k} \cdot \mathbf{A}(\mathbf{k}, 0) = 0$. Our field, where the $\mathbf{A}(\mathbf{k}, 0)$ are to be determined by initial time conditions, is by (2.7) of the form

Since $\mathbf{k} \cdot \mathbf{A}(\mathbf{k}, 0) = 0$, we have $\mathbf{k} \wedge \mathbf{A} = \mathbf{k} \mathbf{A}$. This allows $\mathbf{A}$ to be factored out on the right. The structure of the solution is not changed by incorporating the $i |\mathbf{k}|$ factors into the coefficients $\mathbf{A}(\mathbf{k}, 0)$, leaving the field having the general form

The original meaning of the coefficients $\mathbf{A}(\mathbf{k}, 0)$ as Fourier transforms of the vector potential is obscured by the tidy-up change to absorb the $i |\mathbf{k}|$ factors, but the geometry of the solution is clearer this way.

It is also particularly straightforward to confirm that $\gamma_0 \nabla F = 0$ separately for either half of (4.34).
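The transverse solution can be checked mode by mode. The sketch below assumes $c = 1$ and $\phi = 0$. It also assumes a hypothetical circularly polarized coefficient perpendicular to $\mathbf{k}$, with time dependence $e^{-i|\mathbf{k}| x^0}$. It first evaluates the two Maxwell constraints in single-mode form:

- $\partial_0 (i\mathbf{k}\cdot\mathbf{A}) = 0$
- $\partial_{00}\mathbf{A} + i\mathbf{k}\times(i\mathbf{k}\times\mathbf{A}) = 0$

It then checks the plane-wave relations $\mathbf{k}\times\mathbf{E} = |\mathbf{k}|\mathbf{B}$ and $\mathbf{k}\times\mathbf{B} = -|\mathbf{k}|\mathbf{E}$, which follow from $(\partial_0 + \boldsymbol{\nabla})(\mathbf{E} + I\mathbf{B}) = 0$.

```python
# Sketch, assuming c = 1 (so x0 = t) and a single advanced-wave mode
# A(k, x0) = A0 exp(-i |k| x0) with phi = 0. A0 is a hypothetical transverse
# (k . A0 = 0) coefficient; all numbers are illustrative.
import cmath
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

k = (0.0, 0.0, 2.0)
w = math.sqrt(dot(k, k))             # harmonic in time with frequency |k|
A0 = (1.0, 1j, 0.0)                  # circular polarization, perpendicular to k
x0 = 0.37                            # arbitrary time

A = tuple(a * cmath.exp(-1j * w * x0) for a in A0)
dA = tuple(-1j * w * a for a in A)   # d0 A
ddA = tuple(-w * w * a for a in A)   # d00 A
ik = tuple(1j * kc for kc in k)

# Maxwell constraints with phi = 0, written for one mode (grad -> i k):
scalar_res = dot(ik, dA)                                                 # d0 div A
vector_res = tuple(x + y for x, y in zip(ddA, cross(ik, cross(ik, A))))  # d00 A + curl curl A

# The resulting field should satisfy the vacuum plane-wave relations.
E = tuple(-d for d in dA)
B = cross(ik, A)
faraday = tuple(x - w * y for x, y in zip(cross(k, E), B))   # k x E = |k| B
ampere = tuple(x + w * y for x, y in zip(cross(k, B), E))    # k x B = -|k| E

residuals = [abs(scalar_res)] + [abs(v) for v in vector_res + faraday + ampere]
print(max(residuals))
```

Flipping the sign of the exponent (the receding wave) and of `dA` accordingly gives the same zero residuals.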

Case III. Non-zero scalar potential. No gauge transformation.

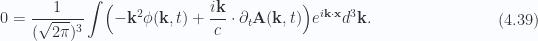

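Now let’s work from (3.11). In particular, a divergence operation can be factored from (3.11), for

$$\boldsymbol{\nabla} \cdot \left( \boldsymbol{\nabla} \phi + \partial_0 \mathbf{A} \right) = 0.$$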

Right off the top, there is a requirement for

In terms of the Fourier transforms this is

Are there any ways for this to equal a constant for all $\mathbf{x}$ without requiring that constant to be zero? Assuming no for now, and that this constant must be zero, this implies a coupling between the $\phi$ and $\mathbf{A}$ Fourier transforms of the form

$$\phi(\mathbf{k}, t) = \frac{i}{\mathbf{k}^2} \mathbf{k} \, \partial_0 \mathbf{A}(\mathbf{k}, t).$$

A secondary implication is that $\partial_0 \mathbf{A}(\mathbf{k}, t) \propto \mathbf{k}$, or else $\phi$ is not a scalar. We had a transverse solution by requiring via gauge transformation that $\phi = 0$, and here we have instead the vector potential in the propagation direction.

Further confirmation that this coupling between the scalar and vector potential is required can be had by evaluating the divergence equation of (4.35)

Rearranging this also produces (4.38). We now want to substitute this relationship into (3.12).

Starting with just the $\partial_0 \phi$ part we have

Taking the gradient of this brings down a factor of $i \mathbf{k}$ for

(3.12) in its entirety is now

This isn’t terribly pleasant looking. Perhaps we can go the other direction. We could write

so that

Note that the gradients here operate on everything to the right, including and especially the exponential. Each application of the gradient brings down an additional $i \mathbf{k}$ factor, and we have

This is identically zero, so this second equation provides no additional information. That is somewhat surprising, since the first equation does not supply many constraints: the remaining free scalar function can be anything. This curiosity is explained by computing the Faraday bivector itself. From (2.7), that is

All terms cancel, so we see that a non-zero $\phi$ leads to $F = 0$, as was the case when considering (4.24) (a case that also resulted in $\mathbf{A}(\mathbf{k}) \propto \mathbf{k}$).

Can this Fourier representation lead to a non-transverse solution to Maxwell’s equation? If so, it is not obvious how.
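The Case III cancellation can also be seen numerically for one mode. This sketch assumes $c = 1$ and takes $\mathbf{A}(\mathbf{k}, x^0) = \mathbf{k}\, s(x^0)$ with a hypothetical $s(x^0) = s_0 e^{-i W x^0}$; any frequency $W$ will do. It fixes $\phi$ from the coupling $\partial_0\mathbf{A} = -i\mathbf{k}\phi$. It then checks the assumed single-mode forms of the two Maxwell constraints:

- $-\mathbf{k}^2\phi + i\mathbf{k}\cdot\partial_0\mathbf{A} = 0$
- $\partial_{00}\mathbf{A} + i\mathbf{k}\,\partial_0\phi + i\mathbf{k}\times(i\mathbf{k}\times\mathbf{A}) = 0$

Both hold, yet $\mathbf{E}$ and $\mathbf{B}$ vanish even though $\phi$ does not.

```python
# Sketch of the Case III observation for one mode, assuming c = 1. The vector
# potential is taken along k, A(k, x0) = k s(x0), with a hypothetical
# s(x0) = s0 exp(-i W x0); the coupling d0 A = -i k phi then fixes phi = i d0 s.
import cmath

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

k = (0.4, 1.1, -0.7)
W = 5.0                      # arbitrary: no dispersion relation is needed
s0 = 0.3 + 0.9j
x0 = 0.81

s = s0 * cmath.exp(-1j * W * x0)
ds, dds = -1j * W * s, -W * W * s
A = tuple(kc * s for kc in k)
dA = tuple(kc * ds for kc in k)
ddA = tuple(kc * dds for kc in k)
phi, dphi = 1j * ds, 1j * dds          # phi = i d0 s
ik = tuple(1j * kc for kc in k)

# Maxwell constraints for one mode (grad -> i k):
scalar_res = -dot(k, k) * phi + dot(ik, dA)          # lap phi + d0 div A
curl_curl = cross(ik, cross(ik, A))
vector_res = tuple(a + ikc * dphi + c                # d00 A + grad d0 phi + curl curl A
                   for a, ikc, c in zip(ddA, ik, curl_curl))

# The field itself: E = -grad phi - d0 A, B = curl A.
E = tuple(-ikc * phi - d for ikc, d in zip(ik, dA))
B = cross(ik, A)
print(abs(phi), max(abs(v) for v in E + B))
```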

The energy momentum tensor

The energy momentum tensor is then

Observing that $\gamma_0$ commutes with spatial bivectors and anticommutes with spatial vectors, and writing $\sigma_\mu = \gamma_\mu \gamma_0$, the tensor splits neatly into scalar and spatial vector components

In particular for $a = \gamma_0$, we have

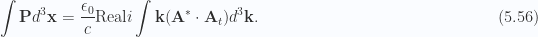

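Integrating this over all space and identifying the delta function

$$\int e^{i (\mathbf{k} - \mathbf{k}') \cdot \mathbf{x}} d^3 \mathbf{x} = (2\pi)^3 \delta^3(\mathbf{k} - \mathbf{k}')$$

reduces the tensor to a single integral in the continuous angular wave number space of $\mathbf{k}$.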

Or,

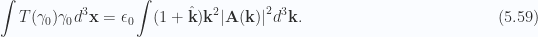
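The delta function step is, in effect, Parseval’s (Plancherel’s) theorem for the symmetric $(2\pi)^{-3/2}$ normalization. The sketch below is a one dimensional numerical analogue. It uses an assumed Gaussian test function, trapezoid quadrature and a hypothetical grid. It checks that $\int |f(x)|^2 dx = \int |\hat{f}(k)|^2 dk$ when $\hat{f}(k) = (2\pi)^{-1/2}\int f(x) e^{-ikx} dx$.

```python
# One dimensional analogue (illustrative assumption): Parseval's theorem with the
# symmetric normalization fhat(k) = (2 pi)^(-1/2) * integral f(x) exp(-i k x) dx.
import cmath
import math

def trapezoid(values, h):
    """Composite trapezoid rule for equally spaced samples with spacing h."""
    return h * (sum(values) - 0.5 * (values[0] + values[-1]))

L, n = 10.0, 401                         # hypothetical grid on [-L, L]
h = 2 * L / (n - 1)
xs = [-L + i * h for i in range(n)]
f = [math.exp(-x * x / 2) for x in xs]   # Gaussian test function

def fhat(k):
    samples = [fx * cmath.exp(-1j * k * x) for fx, x in zip(f, xs)]
    return trapezoid(samples, h) / math.sqrt(2 * math.pi)

ks = xs                                  # reuse the same grid in k space
energy_x = trapezoid([fx * fx for fx in f], h)
energy_k = trapezoid([abs(fhat(k)) ** 2 for k in ks], h)
print(energy_x, energy_k, math.sqrt(math.pi))
```

Both integrals should come out close to $\sqrt{\pi}$ for this Gaussian. This is the one dimensional counterpart of the double integral over $\mathbf{k}$ and $\mathbf{k}'$ collapsing to a single one.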

Multiplying out (5.53) yields for

Recall that the only non-trivial solution we found for the assumed Fourier transform representation of $F$ was for $\phi = 0$, $\mathbf{k} \cdot \mathbf{A}(\mathbf{k}, t) = 0$. Thus we have, for the energy density integrated over all space, just

Observe that we have the structure of a harmonic oscillator for the energy of the radiation system. What is the canonical momentum for this system? Will it correspond to the Poynting vector, integrated over all space?

Let’s reduce the vector component of (5.53), after first imposing the $\phi = 0$ and $\mathbf{k} \cdot \mathbf{A}(\mathbf{k}, t) = 0$ conditions used above for our harmonic oscillator form energy relationship. This is

This is just

Recall that the Fourier transforms for the transverse propagation case had the form $\mathbf{A}(\mathbf{k}, t) = \mathbf{A}(\mathbf{k}, 0) e^{\pm i c |\mathbf{k}| t}$, where the minus generated the advanced wave and the plus the receding wave. Substituting the vector potential for the advanced wave into the energy and momentum results of (5.55) and (5.56) respectively, we have

After a somewhat circuitous route, this has the relativistic symmetry that is expected. In particular, for the complete $T(\gamma_0)\gamma_0$ tensor we have, after integration over all space,

The receding wave solution would give the same result, but directed as $-\hat{\mathbf{k}}$ instead.
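For a single advanced or receding mode this symmetry can be checked numerically. Assume the complex tensor form $T(a) = -\frac{\epsilon_0}{4}(F^* a F + F a F^*)$ with $F = \mathbf{E} + I\mathbf{B}$, and units with $\epsilon_0 = c = 1$. The scalar part of $T(\gamma_0)\gamma_0$ then works out to $\frac{1}{2}(|\mathbf{E}|^2 + |\mathbf{B}|^2)$, and the vector part to $\frac{1}{2}(\mathbf{E}\times\mathbf{B}^* + \mathbf{E}^*\times\mathbf{B})$. The sketch evaluates both for a hypothetical transverse coefficient. The momentum should have the same magnitude as the energy, pointing along $+\hat{\mathbf{k}}$ for the advanced wave and $-\hat{\mathbf{k}}$ for the receding one.

```python
# Sketch, assuming epsilon_0 = c = 1 and a single Fourier mode; the mode's
# exponential cancels against its conjugate, so only coefficients are needed.
import math

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def conj(v):
    return tuple(c.conjugate() for c in v)

def energy_momentum(E, B):
    """Scalar (energy) and vector (momentum) parts of T(gamma_0) gamma_0."""
    U = 0.5 * sum(abs(e) ** 2 + abs(b) ** 2 for e, b in zip(E, B))
    P = tuple(0.5 * (x + y).real
              for x, y in zip(cross(E, conj(B)), cross(conj(E), B)))
    return U, P

k = (0.0, 0.0, 3.0)
w = math.sqrt(sum(kc * kc for kc in k))
A0 = (1.0, 2j, 0.0)                          # hypothetical, with k . A0 = 0
B = cross(tuple(1j * kc for kc in k), A0)    # B = i k x A, same for both waves
E_adv = tuple(1j * w * a for a in A0)        # A ~ exp(-i w x0) gives E = +i w A
E_rec = tuple(-1j * w * a for a in A0)       # A ~ exp(+i w x0) gives E = -i w A

U_adv, P_adv = energy_momentum(E_adv, B)
U_rec, P_rec = energy_momentum(E_rec, B)
print(U_adv, P_adv)
print(U_rec, P_rec)
```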

Observe that we also have the four divergence conservation statement that is expected

This follows trivially since both the derivatives are zero. Suppose the integration region were more specific, instead of an integral over all space. Then the power flux would be equal in magnitude to the momentum change through a bounding surface. For a more general surface the time and spatial dependencies need not vanish, but we should still have this radiation energy momentum conservation.

References

[1] Peeter Joot. Electrodynamic field energy for vacuum. [online]. http://sites.google.com/site/peeterjoot/math2009/fourierMaxVac.pdf.

[2] Peeter Joot. Energy and momentum for Complex electric and magnetic field phasors. [online]. http://sites.google.com/site/peeterjoot/math2009/complexFieldEnergy.pdf.

[3] D. Bohm. Quantum Theory. Courier Dover Publications, 1989.
