Understanding how to apply Stokes theorem to higher dimensional spaces, to non-Euclidean metrics, and with curvilinear coordinates has been a long-standing goal of mine.

A traditional answer to these questions can be found in the formalism of differential forms, as covered for example in [2] and [8]. However, both of those texts, despite their small size, are intensely scary. I also found it counterintuitive to have to express all physical quantities as forms, since there are many times when we have no pressing desire to integrate them.

Later I encountered Denker’s straight wire treatment [1], which states that the geometric algebra formulation of Stokes theorem has the form

$$\int_S \nabla \wedge F = \int_{\partial S} F.$$

This is simple enough looking, but there are some important details left out. In particular the grades do not match, so there must be some sort of implied projection or dot product operations too. We also need to understand how to express the hypervolume and hypersurfaces when evaluating these integrals, especially when we want to use curvilinear coordinates.

I’d attempted to puzzle through these details previously. A collection of these attempts, to be removed from my collection of geometric algebra notes, can be found in [4]. I’d recently reviewed all of these and wrote a compact synopsis [5] of all those notes, but in the process of doing so, I realized there were a couple of fundamental problems with the approach I had used.

One detail that I had failed to understand was the requirement to treat an infinitesimal region in the proof, and then to sum over such regions to express the boundary integral. That the boundary integral form and its dot product are both evaluated only at the end points of the integral region is an important detail that follows from such an argument (as used in the proof of Stokes theorem for a 3D Cartesian space in [7]).

I also realized that my previous attempts could only work for the special cases where the dimension of the integration volume also equaled the dimension of the vector space. The key to resolving this issue is the concept of the tangent space, and an understanding of how to express the projection of the gradient onto the tangent space. These concepts are covered thoroughly in [6], which also introduces Stokes theorem as a special case of a more fundamental theorem for integration of geometric algebraic objects. My objective, for now, is still just to understand the generalization of Stokes theorem, and I will leave the fundamental theorem of geometric calculus to later.

Now that these details are understood, the purpose of these notes is to detail the geometric algebra form of Stokes theorem, covering its generalization to higher dimensional spaces and non-Euclidean metrics (especially those used for special relativity and electromagnetism), and to show how to properly deal with curvilinear coordinates. This generalization has the form

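Theorem 1. Stokes’ Theorem

For blades $F \in \bigwedge^{s}$, and $k$ volume element $d^k \mathbf{x}$, $s < k$,

$$\int_V d^k \mathbf{x} \cdot (\boldsymbol{\partial} \wedge F) = \int_{\partial V} d^{k-1} \mathbf{x} \cdot F.$$

Here the volume integral is over a $k$ dimensional surface (manifold) $V$, $\boldsymbol{\partial}$ is the projection of the gradient onto the tangent space of the manifold, and $\partial V$ indicates integration over the boundary of $V$.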

It takes some work to give this more concrete meaning. I will attempt to do so in a gradual fashion, and provide a number of examples that illustrate some of the relevant details.

Basic notation

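A finite dimensional vector space, not necessarily Euclidean, with basis $\{\mathbf{e}_1, \mathbf{e}_2, \cdots\}$ will be assumed to be the generator of the geometric algebra. A dual or reciprocal basis $\{\mathbf{e}^1, \mathbf{e}^2, \cdots\}$ for this basis can be calculated, defined by the property

$$\mathbf{e}_i \cdot \mathbf{e}^j = {\delta_i}^j.$$

This is a Euclidean space when $\mathbf{e}_i = \mathbf{e}^i$ for all $i$.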
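The defining property of the reciprocal basis can be turned directly into a computation. The following is a minimal sketch, not part of the original notes, that assumes vectors are stored as component rows and that the dot product is supplied as a symmetric metric matrix. The function name `reciprocal_basis`, the skewed example basis, and the (+,-,-,-) signature are all illustrative assumptions.

```python
import numpy as np

def reciprocal_basis(basis, metric):
    """Rows of the result are the reciprocal vectors e^i.

    basis:  (n, n) array whose row i holds the components of e_i.
    metric: (n, n) symmetric array, so that a . b = a @ metric @ b.
    """
    gram = basis @ metric @ basis.T        # G_ij = e_i . e_j
    return np.linalg.inv(gram) @ basis     # e^i = (G^-1)^{ij} e_j

# A skewed (non-orthonormal) basis with a Euclidean metric.
euclidean = np.eye(2)
skewed = np.array([[1.0, 0.0],
                   [1.0, 1.0]])
skewed_dual = reciprocal_basis(skewed, euclidean)

# The standard basis with an assumed (+,-,-,-) Minkowski metric.
minkowski = np.diag([1.0, -1.0, -1.0, -1.0])
standard = np.eye(4)
standard_dual = reciprocal_basis(standard, minkowski)

# Both products below are identity matrices: e^i . e_j = delta^i_j.
print(skewed_dual @ euclidean @ skewed.T)
print(standard_dual @ minkowski @ standard.T)
```

In the Minkowski case the spacelike reciprocal vectors come out as the negatives of the basis vectors, which is the metric dependence that shows up repeatedly in the examples that follow.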

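To select from a multivector $A$ the grade $k$ portion, say $A_k$, we write

$$A_k = \langle A \rangle_k.$$

The scalar portion of a multivector $A$ will be written as

$$\langle A \rangle_0 \equiv \langle A \rangle.$$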

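The grade selection operators can be used to define the outer and inner products. For blades $U$ and $V$ of grade $r$ and $s$ respectively, these are

$$\langle U V \rangle_{r + s} \equiv U \wedge V, \qquad \langle U V \rangle_{|r - s|} \equiv U \cdot V.$$

Written out explicitly for odd grade blades $A$ (vector, trivector, …), and vector $\mathbf{a}$, the dot and wedge products are respectively

$$\mathbf{a} \cdot A = \frac{1}{2} (\mathbf{a} A + A \mathbf{a}), \qquad \mathbf{a} \wedge A = \frac{1}{2} (\mathbf{a} A - A \mathbf{a}).$$

Similarly for even grade blades these are

$$\mathbf{a} \cdot A = \frac{1}{2} (\mathbf{a} A - A \mathbf{a}), \qquad \mathbf{a} \wedge A = \frac{1}{2} (\mathbf{a} A + A \mathbf{a}).$$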

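It will be useful to employ the cyclic scalar reordering identity for the scalar selection operator. For multivectors $A$, $B$, $C$ this is

$$\langle A B C \rangle = \langle B C A \rangle = \langle C A B \rangle.$$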
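Cyclic reordering within a scalar selection is easy to check numerically. The sketch below is an illustration only: it assumes a Euclidean 3D space represented by the Pauli matrices, for which the scalar part of a product is the real part of half the matrix trace. The helper names `vector` and `scalar_part` are hypothetical.

```python
import numpy as np

# Pauli matrices, used here as a matrix representation of an
# orthonormal basis e_1, e_2, e_3 for a Euclidean 3D space.
SIGMA = (
    np.array([[0, 1], [1, 0]], dtype=complex),
    np.array([[0, -1j], [1j, 0]], dtype=complex),
    np.array([[1, 0], [0, -1]], dtype=complex),
)

def vector(components):
    """Matrix representing the vector with the given Cartesian components."""
    return sum(c * s for c, s in zip(components, SIGMA))

def scalar_part(m):
    """Grade zero selection <M>: the real part of half the trace."""
    return (np.trace(m) / 2).real

rng = np.random.default_rng(0)
a, b, c, d = (vector(rng.normal(size=3)) for _ in range(4))

# Cyclic reorderings of a four vector product share one scalar part.
cyclic = (scalar_part(a @ b @ c @ d),
          scalar_part(b @ c @ d @ a),
          scalar_part(c @ d @ a @ b))
print(cyclic)

# A non-cyclic reordering is not covered by the identity.
e1, e2 = vector([1, 0, 0]), vector([0, 1, 0])
in_order = scalar_part(e1 @ e2 @ e1 @ e2)   # e1 e2 e1 e2 = -1
swapped = scalar_part(e2 @ e1 @ e1 @ e2)    # e2 e1 e1 e2 = +1
print(in_order, swapped)
```

A product of four vectors is used because the product of three vectors has only grade one and grade three parts, which would make the check trivially zero.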

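For an $N$ dimensional vector space, a product of $N$ orthonormal (up to a sign) unit vectors is referred to as a pseudoscalar for the space, typically denoted by $I$

$$I = \mathbf{e}_1 \mathbf{e}_2 \cdots \mathbf{e}_N.$$

The pseudoscalar may commute or anticommute with other blades in the space. We may also form a pseudoscalar for a subspace spanned by vectors $\{\mathbf{a}, \mathbf{b}, \cdots, \mathbf{c}\}$ by unit scaling the wedge product of those vectors, $\mathbf{a} \wedge \mathbf{b} \wedge \cdots \wedge \mathbf{c}$.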

Curvilinear coordinates

For our purposes a manifold can be loosely defined as a parameterized surface. For example, a 2D manifold can be considered a surface in an $n$ dimensional vector space, parameterized by two variables

$$\mathbf{x} = \mathbf{x}(u^1, u^2).$$

Note that the indices here do not represent exponentiation. We can construct a basis for the manifold as

$$\mathbf{x}_i = \frac{\partial \mathbf{x}}{\partial u^i}.$$

On the manifold we can calculate a reciprocal basis $\{\mathbf{x}^i\}$, defined by requiring, at each point on the surface

$$\mathbf{x}^i \cdot \mathbf{x}_j = {\delta^i}_j.$$

Associated implicitly with this basis is a curvilinear coordinate representation defined by the projection operation

$$\text{Proj}(\mathbf{x}) = x^i \mathbf{x}_i$$

(sums over mixed indices are implied). These coordinates can be calculated by taking dot products with the reciprocal frame vectors

$$\mathbf{x} \cdot \mathbf{x}^i = x^j \mathbf{x}_j \cdot \mathbf{x}^i = x^i.$$

In this document all coordinates are with respect to a specific curvilinear basis, and not with respect to the standard basis or its dual basis unless otherwise noted.

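Similar to the usual notation for derivatives with respect to the standard basis coordinates we form a lower index partial derivative operator

$$\partial_i \equiv \frac{\partial}{\partial u^i},$$

so that when the complete vector space is spanned by $\{\mathbf{x}_i\}$ the gradient has the curvilinear representation

$$\nabla = \mathbf{x}^i \partial_i.$$

This can be motivated by noting that the directional derivative is defined by

$$\mathbf{a} \cdot \nabla f(\mathbf{x}) = \lim_{t \rightarrow 0} \frac{f(\mathbf{x} + t \mathbf{a}) - f(\mathbf{x})}{t}.$$

When the basis $\{\mathbf{x}_i\}$ does not span the space, the projection of the gradient onto the tangent space at the point of evaluation is

$$\boldsymbol{\partial} = \mathbf{x}^i \partial_i = \sum_i \mathbf{x}^i \frac{\partial}{\partial u^i}.$$

This is called the vector derivative.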

See [6] for a more complete discussion of the gradient and vector derivatives in curvilinear coordinates.
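To make the vector derivative concrete, here is a small numerical sketch. It is an illustration under stated assumptions rather than anything from [6]: the surface (a paraboloid in Euclidean 3D), the scalar function, and all function names are made up for the example. It builds the tangent basis, computes the reciprocal frame on the tangent space from the Gram matrix, and checks that the sum of reciprocal frame vectors weighted by the parameter partials of `f` equals the projection of the full gradient onto the tangent plane.

```python
import numpy as np

def tangent_basis(u, v):
    """Tangent vectors x_u, x_v of the surface x(u, v) = (u, v, u^2 + v^2)."""
    x_u = np.array([1.0, 0.0, 2 * u])
    x_v = np.array([0.0, 1.0, 2 * v])
    return np.array([x_u, x_v])

def reciprocal_frame(tangent):
    """Reciprocal vectors on the tangent space (Euclidean dot product)."""
    gram = tangent @ tangent.T
    return np.linalg.inv(gram) @ tangent

def grad_f(p):
    """Full 3D gradient of the sample scalar function f = x y + z^2."""
    x, y, z = p
    return np.array([y, x, 2 * z])

u, v = 0.3, -0.7
p = np.array([u, v, u**2 + v**2])
tangent = tangent_basis(u, v)
recip = reciprocal_frame(tangent)

# Chain rule: the partial of f with respect to each parameter is x_i . grad f.
partials = tangent @ grad_f(p)

# Vector derivative: sum over i of x^i times the i-th partial of f.
vector_derivative = partials @ recip

# Independent check: remove the normal component of the full gradient.
normal = np.cross(tangent[0], tangent[1])
normal /= np.linalg.norm(normal)
projected = grad_f(p) - (grad_f(p) @ normal) * normal

print(vector_derivative, projected)
```

The cross product is used only for the independent check, and is available only because this example lives in 3D. The reciprocal frame computation itself makes no use of a normal, so it carries over to surfaces in higher dimensional spaces.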

Green’s theorem

Given a two parameter ($u, v$) surface parameterization, the curvilinear coordinate representation of a vector $\mathbf{f}$ has the form

$$\mathbf{f} = f_u \mathbf{x}^u + f_v \mathbf{x}^v + f_\perp \mathbf{x}^\perp.$$

We assume that the vector space is of dimension two or greater but otherwise unrestricted, and need not have a Euclidean basis. Here $f_\perp \mathbf{x}^\perp$ denotes the rejection of $\mathbf{f}$ from the tangent space at the point of evaluation. Green’s theorem relates the integral around a closed curve to an “area” integral on that surface

Theorem 2. Green’s Theorem

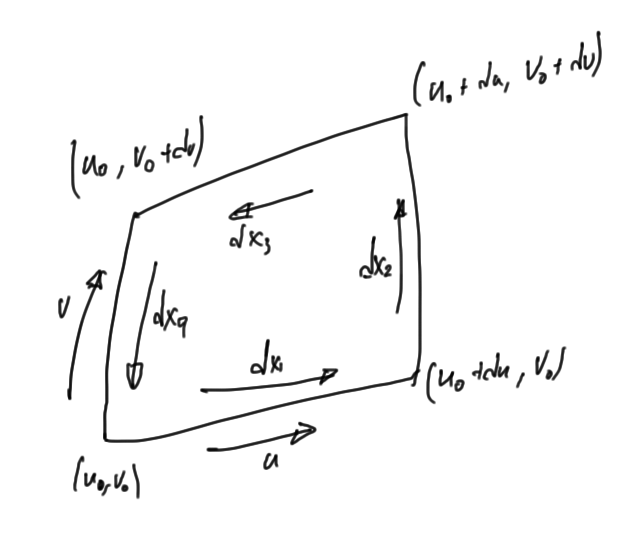

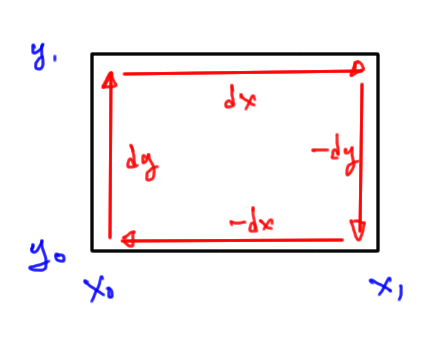

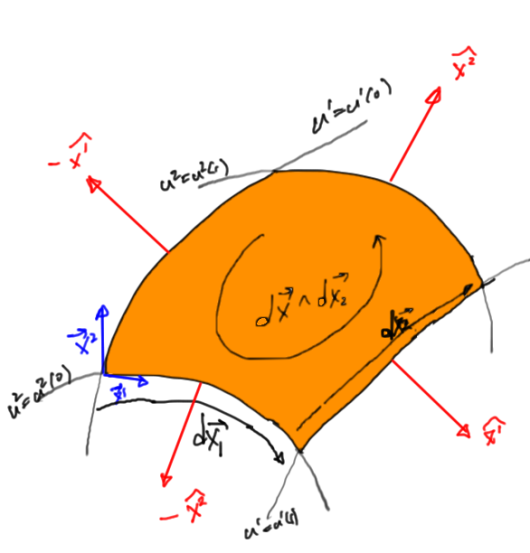

Following the arguments used in [7] for Stokes theorem in three dimensions, we first evaluate the loop integral along the differential element of the surface at the point evaluated over the range

, as shown in the infinitesimal loop of fig. 1.1.

Over the infinitesimal area, the loop integral decomposes into

where the differentials along the curve are

It is assumed that the parameterization change is small enough that this loop integral can be considered planar (regardless of the dimension of the vector space). Making use of the fact that

for

, the loop integral is

With the distances being infinitesimal, these differences can be rewritten as partial differentials

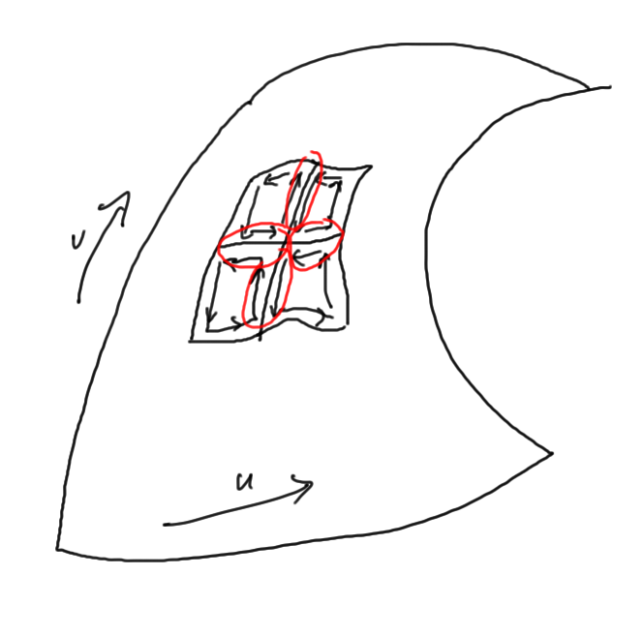

We can now sum over a larger area as in fig. 1.2

All the opposing oriented loop elements cancel, so the integral around the complete boundary of the surface is given by the area integral of the partials difference.

We will see that Green’s theorem is a special case of the Curl (Stokes) theorem. This observation will also provide a geometric interpretation of the right hand side area integral of thm. 2, and allow for a coordinate free representation.

Special case:

An important special case of Green’s theorem is for a Euclidean two dimensional space where the vector function is

Here Green’s theorem takes the form
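A quick numerical check of the Euclidean special case is sketched below. Everything specific in it is an assumption made for illustration: the vector function is written with components `P` and `Q` along the two Cartesian axes, the region is the unit square, and the textbook counterclockwise statement (loop integral of `P dx + Q dy` equal to the area integral of `dQ/dx - dP/dy`) is used as the reference. Traversing the loop clockwise flips the sign of the loop integral, which is worth keeping in mind given the orientation conventions discussed later in these notes.

```python
import numpy as np

def P(x, y):
    return -y

def Q(x, y):
    return x * y

def curl_z(x, y):
    # dQ/dx - dP/dy for the sample field above: y - (-1).
    return y + 1.0

n = 2000
h = 1.0 / n
t = (np.arange(n) + 0.5) * h            # midpoints on [0, 1]
zeros, ones = np.zeros(n), np.ones(n)

# Counterclockwise traversal of the unit square, one edge at a time.
bottom = np.sum(P(t, zeros)) * h        # y = 0, x from 0 to 1
right = np.sum(Q(ones, t)) * h          # x = 1, y from 0 to 1
top = -np.sum(P(t, ones)) * h           # y = 1, x from 1 to 0
left = -np.sum(Q(zeros, t)) * h         # x = 0, y from 1 to 0
loop_ccw = bottom + right + top + left
loop_cw = -loop_ccw                     # reversed traversal

X, Y = np.meshgrid(t, t)
area_integral = np.sum(curl_z(X, Y)) * h * h

print(loop_ccw, loop_cw, area_integral)
```

For this field both the counterclockwise loop integral and the area integral evaluate to 3/2, and the clockwise loop integral to -3/2.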

Curl theorem, two volume vector field

Having examined the right hand side of thm. 1 for the very simplest geometric object $\mathbf{f}$, let’s look at the left hand side, the area integral, in more detail. We restrict our attention for now to vectors $\mathbf{f}$

still defined by eq. 1.19.

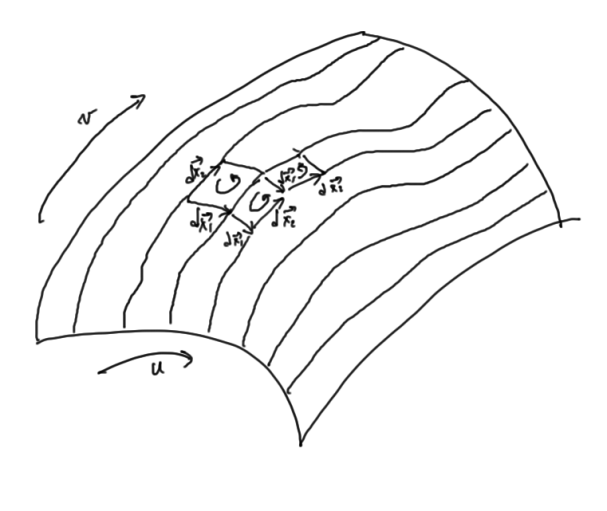

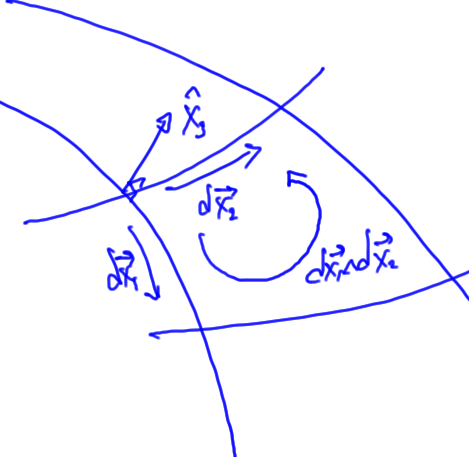

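First we need to assign a meaning to $d^2 \mathbf{x}$. By this, we mean the wedge product of the two differential elements. With (no sum)

$$d\mathbf{x}_i = du^i \frac{\partial \mathbf{x}}{\partial u^i} = du^i \mathbf{x}_i,$$

that area element is

$$d^2 \mathbf{x} = d\mathbf{x}_1 \wedge d\mathbf{x}_2 = du^1 du^2 \, \mathbf{x}_1 \wedge \mathbf{x}_2.$$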

This is the oriented area element that lies in the tangent plane at the point of evaluation, and has the magnitude of the area of that segment of the surface, as depicted in fig. 1.3.

Observe that we have no requirement to introduce a normal to the surface to describe the direction of the plane. The wedge product provides the information about the orientation of the plane in the space, even when the vector space that our vector lies in has dimension greater than three.
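The point that no normal is needed can be seen numerically. The sketch below is only an illustration: it assumes a Euclidean 4D space and a made-up surface, and represents the bivector formed from the two tangent vectors by its antisymmetric component array. The area magnitude computed from the independent bivector components agrees with the one computed from the Gram determinant of the tangent vectors, and at no point is a normal vector (which is not unique in 4D) required.

```python
import numpy as np

def wedge(a, b):
    """Antisymmetric components a_i b_j - a_j b_i of the bivector a ^ b."""
    return np.outer(a, b) - np.outer(b, a)

def tangent_vectors(u, v):
    """Tangent vectors of the sample surface x(u, v) = (u, v, u v, u^2 - v^2)."""
    x_u = np.array([1.0, 0.0, v, 2 * u])
    x_v = np.array([0.0, 1.0, u, -2 * v])
    return x_u, x_v

x_u, x_v = tangent_vectors(0.4, 1.1)
bivector = wedge(x_u, x_v)

# Magnitude from the six independent components (i < j).
norm_from_components = np.sqrt(np.sum(np.triu(bivector, k=1) ** 2))

# Magnitude from the Gram determinant of the two tangent vectors.
norm_from_gram = np.sqrt((x_u @ x_u) * (x_v @ x_v) - (x_u @ x_v) ** 2)

print(norm_from_components, norm_from_gram)
```

Multiplying this magnitude by the two parameter differentials gives the scalar area of the surface patch. Swapping the two tangent vectors negates every component, which is the orientation information that the wedge product carries.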

Proceeding with the expansion of the dot product of the area element with the curl, using eq. 1.0.6, eq. 1.0.7, and eq. 1.0.8, and a scalar selection operation, we have

Let’s proceed to expand the inner dot product

The complete curl term is thus

This almost has the form of eq. 1.23, although that is not immediately obvious. Working backwards, using the shorthand , we can show that this coordinate representation can be eliminated

This relates the two parameter surface integral of the curl to the loop integral over its boundary

This is the very simplest special case of Stokes theorem. When written in the general form of Stokes thm. 1

we must remember (the is to remind us of this) that it is implied that both the vector

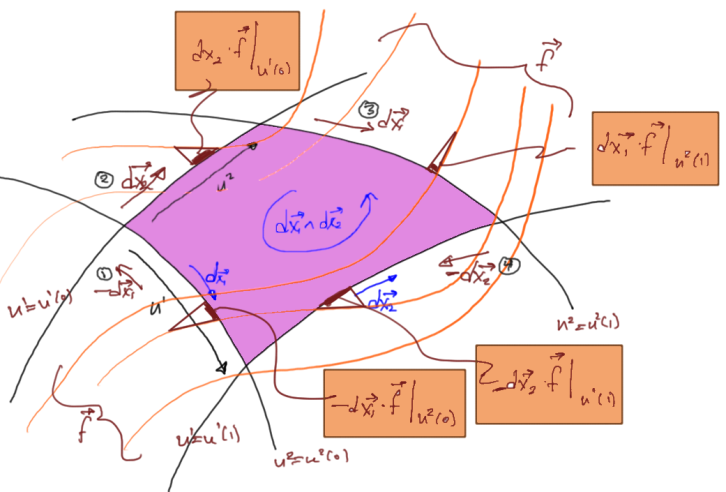

and the differential elements are evaluated on the boundaries of the integration ranges respectively. A more exact statement is

Expanded out in full this is

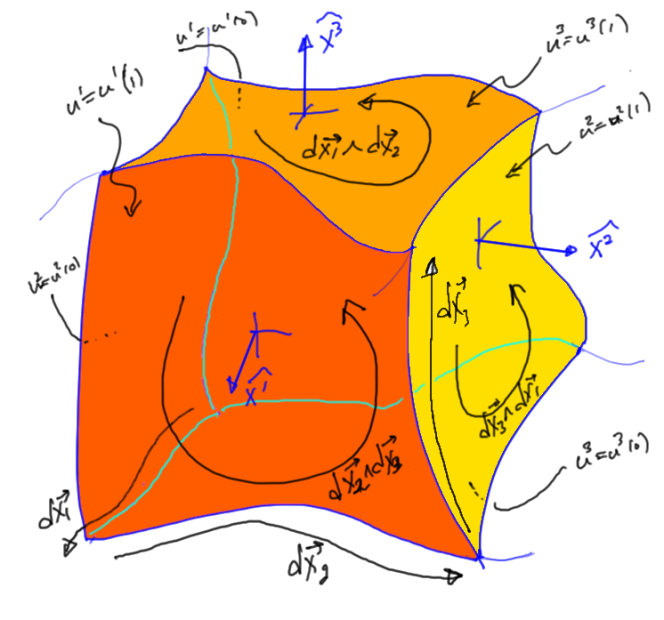

which can be cross checked against fig. 1.4 to demonstrate that this specifies a clockwise orientation. For the surface with oriented area , the clockwise loop is designated with line elements (1)-(4), we see that the contributions around this loop (in boxes) match eq. 1.0.35.

Example: Green’s theorem, a 2D Cartesian parameterization for a Euclidean space

For a Cartesian 2D Euclidean parameterization of a vector field and the integration space, Stokes theorem should be equivalent to Green’s theorem eq. 1.0.25. Let’s expand both sides of eq. 1.0.32 independently to verify equality. The parameterization is

Here the dual basis is the basis, and the projection onto the tangent space is just the gradient

The volume element is an area weighted pseudoscalar for the space

and the curl of a vector is

So, the LHS of Stokes theorem takes the coordinate form

For the RHS, following fig. 1.5, we have

As expected, we can also obtain this by integrating eq. 1.0.38.

Example: Cylindrical parameterization

Let’s now consider a cylindrical parameterization of a 4D space with Euclidean metric or Minkowski metric

. For such a space let’s do a brute force expansion of both sides of Stokes theorem to gain some confidence that all is well.

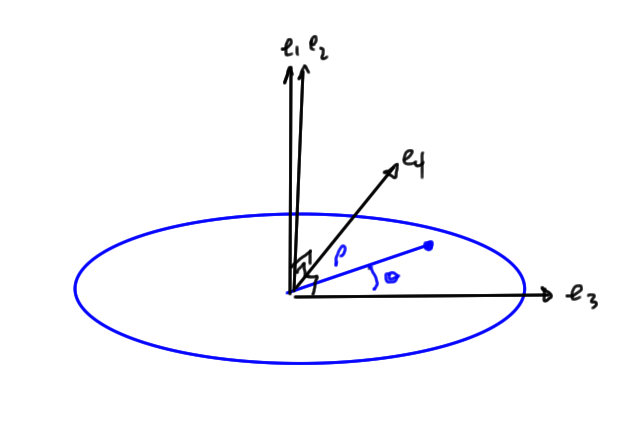

With , such a space is conveniently parameterized as illustrated in fig. 1.6 as

Note that the Euclidean case where rejection of the non-axial components of

expands to

whereas for the Minkowski case where we have a hyperbolic expansion

Within such a space consider the surface along , for which the vectors are parameterized by

The tangent space unit vectors are

and

Observe that both of these vectors have their origin at the point of evaluation, and aren’t relative to the absolute origin used to parameterize the complete space.

We wish to compute the volume element for the tangent plane. Noting that and

both anticommute with

we have for

so

The tangent space volume element is thus

With the tangent plane vectors both perpendicular we don’t need the general lemma 6 to compute the reciprocal basis, but can do so by inspection

and

Observe that the latter depends on the metric signature.

The vector derivative, the projection of the gradient on the tangent space, is

From this we see that acting with the vector derivative on a scalar radial only dependent function is a vector function that has a radial direction, whereas the action of the vector derivative on an azimuthal only dependent function

is a vector function that has only an azimuthal direction. The interpretation of the geometric product action of the vector derivative on a vector function is not as simple since the product will be a multivector.

Expanding the curl in coordinates is messier, but yields in the end when tackled with sufficient care

After all this reduction, we can now state in coordinates the LHS of Stokes theorem explicitly

Now compare this to the direct evaluation of the loop integral portion of Stokes theorem. Expressing this using eq. 1.0.34, we have the same result

This example highlights some of the power of Stokes theorem, since the reduction of the volume element differential form was seen to be quite a chore (and easy to make mistakes doing.)

Example: Composition of boost and rotation

Working in a space with basis

where

and

, an active composition of boost and rotation has the form

where is a bivector of a timelike unit vector and perpendicular spacelike unit vector, and

is a bivector of two perpendicular spacelike unit vectors. For example,

and

. For such

the respective Lorentz transformation matrices are

and

Let’s calculate the tangent space vectors for this parameterization, assuming that the particle is at an initial spacetime position of . That is

To calculate the tangent space vectors for this subspace we note that

and

The tangent space vectors are therefore

Continuing a specific example where let’s also pick

, the spacetime position of a particle at the origin of a frame at that frame’s

. The tangent space vectors for the subspace parameterized by this transformation and this initial position are then reduced to

and

By inspection the dual basis for this parameterization is

So, Stokes theorem, applied to a spacetime vector , for this subspace is

Since the point is to avoid the curl integral, we did not actually have to state it explicitly, nor was there any actual need to calculate the dual basis.
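As a sanity check on the kind of parameterization used in this example, the following sketch composes a boost and a rotation in matrix form and verifies that the composition preserves the spacetime interval. The details are assumptions chosen for illustration, not the exact setup above: a (+,-,-,-) signature, a boost along the first spatial axis with rapidity `alpha`, a rotation in the plane of the first two spatial axes with angle `theta`, and an initial position of one time unit along the timelike axis.

```python
import numpy as np

ETA = np.diag([1.0, -1.0, -1.0, -1.0])   # assumed (+,-,-,-) signature

def boost_x(alpha):
    """Boost mixing the time axis and first spatial axis, rapidity alpha."""
    ch, sh = np.cosh(alpha), np.sinh(alpha)
    m = np.eye(4)
    m[0, 0] = m[1, 1] = ch
    m[0, 1] = m[1, 0] = sh
    return m

def rotation_xy(theta):
    """Rotation by theta in the plane of the first two spatial axes."""
    c, s = np.cos(theta), np.sin(theta)
    m = np.eye(4)
    m[1, 1] = m[2, 2] = c
    m[1, 2] = -s
    m[2, 1] = s
    return m

alpha, theta = 0.7, 1.2
transform = rotation_xy(theta) @ boost_x(alpha)

x0 = np.array([1.0, 0.0, 0.0, 0.0])      # assumed initial position
x = transform @ x0

# A Lorentz transformation L satisfies L^T eta L = eta, so the
# interval x . x is unchanged by the composition.
print(transform.T @ ETA @ transform)
print(x0 @ ETA @ x0, x @ ETA @ x)
```

Differentiating `x` with respect to `alpha` and `theta` would give the two tangent space vectors for the surface swept out by this two parameter family.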

Example: Dual representation in three dimensions

It’s clear that there is a projective nature to the differential form . This projective nature allows us, in three dimensions, to re-express Stokes theorem using the gradient instead of the vector derivative, and to utilize the cross product and a normal direction to the plane.

When we parameterize a normal direction to the tangent space, so that for a 2D tangent space spanned by curvilinear coordinates and

the vector

is normal to both, we can write our vector as

and express the orientation of the tangent space area element in terms of a pseudoscalar that includes this normal direction

Inserting this into an expansion of the curl form we have

Observe that this last term, the contribution of the component of the gradient perpendicular to the tangent space, has no components

leaving

Now scale the normal vector and its dual to have unit norm as follows

so that for , the volume element can be

This scaling choice is illustrated in fig. 1.7, and represents the “outwards” normal. With such a scaling choice we have

and almost have the desired cross product representation

With the duality identity , we have the traditional 3D representation of Stokes theorem

Note that the orientation of the loop integral in the traditional statement of the 3D Stokes theorem is counterclockwise instead of clockwise, as written here.

Stokes theorem, three variable volume element parameterization

We can restate the identity of thm. 1 in an equivalent dot product form.

Here , with the implicit assumption that it and the blade

that it is dotted with, are both evaluated at the end points of integration variable

that has been integrated against.

We’ve seen one specific example of this above in the expansions of eq. 1.28, and eq. 1.29. However, the equivalent result of eq. 1.0.78, somewhat magically, applies to any degree blade and volume element provided the degree of the blade is less than that of the volume element (i.e. $s < k$ for a grade $s$ blade and a $k$ parameter volume element). That magic follows directly from lemma 1.

As an expositional example, consider a three variable volume element parameterization, and a vector blade

It should not be surprising that this has the structure found in the theory of differential forms. Using the differentials for each of the parameterization “directions”, we can write this dot product expansion as

Observe that the sign changes with each element of that is skipped. In differential forms, the wedge product composition of 1-forms is an abstract quantity. Here the differentials are just vectors, and their wedge product represents an oriented volume element. This interpretation is likely available in the theory of differential forms too, but is arguably less obvious.

Digression

As was the case with the loop integral, we expect that the coordinate representation has a representation that can be expressed as a number of antisymmetric terms. A bit of experimentation shows that such a sum, after dropping the parameter space volume element factor, is

To proceed with the integration, we must again consider an infinitesimal volume element, for which the partial can be evaluated as the difference of the endpoints, with all else held constant. For this three variable parameterization, say, , let’s delimit such an infinitesimal volume element by the parameterization ranges

,

,

. The integral is

Extending this over the ranges ,

,

, we have proved Stokes thm. 1 for vectors and a three parameter volume element, provided we have a surface element of the form

where the evaluation of the dot products with are also evaluated at the same points.

Example: Euclidean spherical polar parameterization of 3D subspace

Consider a Euclidean space where a 3D subspace is parameterized using spherical coordinates, as in

The tangent space basis for the subspace situated at some fixed , is easy to calculate, and is found to be

We can use the general relation of lemma 7 to compute the reciprocal basis. That is

However, a naive attempt at applying this without algebraic software requires a lot of care, and it is easy to make mistakes. In this case it is really not necessary since the tangent space basis only requires scaling to orthonormalize, satisfying for

This allows us to read off the dual basis for the tangent volume by inspection

Should we wish to explicitly calculate the curl on the tangent space, we would need these. The area and volume elements are also messy to calculate manually. This expansion can be found in the Mathematica notebook sphericalSurfaceAndVolumeElements.nb, and is

Those area elements have a geometric algebra factorization that is perhaps useful

One of the beauties of Stokes theorem is that we don’t actually have to calculate the dual basis on the tangent space to proceed with the integration. For the calculation above, where we had a normal tangent basis, I still used software as an aid, so it is clear that this can generally get pretty messy.
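The claim that a spherical tangent basis is orthogonal but not orthonormal, so that the dual basis can be read off by scaling, can be checked with a few lines of code. This is a minimal sketch assuming the ordinary spherical polar parameterization of a Euclidean 3D space, which may differ in detail from the embedding used above. The names `r`, `theta`, and `phi` are this sketch's own.

```python
import numpy as np

def tangent_basis(r, theta, phi):
    """Partials of x = r (sin t cos p, sin t sin p, cos t) w.r.t. r, t, p."""
    st, ct = np.sin(theta), np.cos(theta)
    sp, cp = np.sin(phi), np.cos(phi)
    x_r = np.array([st * cp, st * sp, ct])
    x_theta = r * np.array([ct * cp, ct * sp, -st])
    x_phi = r * st * np.array([-sp, cp, 0.0])
    return np.array([x_r, x_theta, x_phi])

r, theta, phi = 2.0, 0.6, 1.3
basis = tangent_basis(r, theta, phi)

# The Gram matrix is diagonal: the basis is orthogonal, with squared
# lengths 1, r^2 and r^2 sin^2(theta).
gram = basis @ basis.T
squares = np.diag(gram)

# Dual basis by inspection: divide each vector by its own square.
dual = basis / squares[:, None]

print(np.round(gram, 12))
print(np.round(dual @ basis.T, 12))
```

For a Minkowski metric the same scaling works, but the squares can be negative, so the signs of some dual vectors flip relative to the tangent vectors.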

To apply Stokes theorem to a vector field we can use eq. 1.0.82 to write down the integral directly

Observe that eq. 1.0.90 is a vector valued integral that expands to

This could easily be a difficult integral to evaluate since the vectors evaluated at the endpoints are still functions of two parameters. An easier integral would result from the application of Stokes theorem to a bivector valued field, say

, for which we have

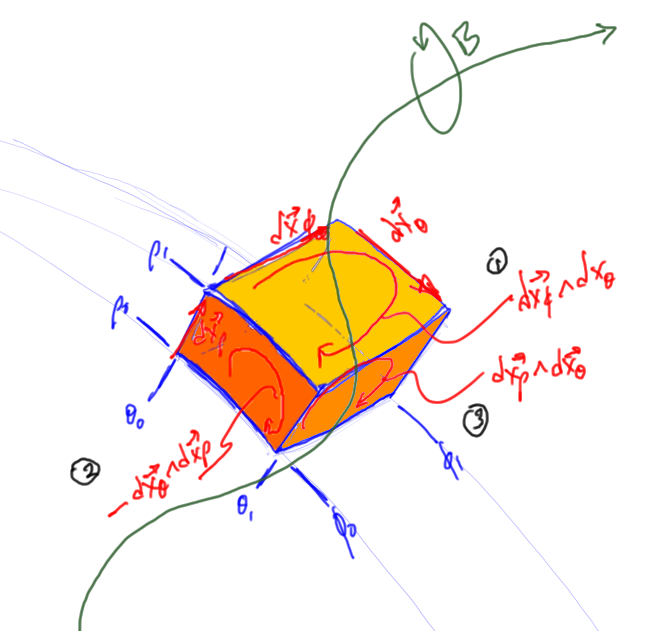

There is a geometric interpretation to these oriented area integrals, especially when written out explicitly in terms of the differentials along the parameterization directions. Pulling out a sign explicitly to match the geometry (as we had to also do for the line integrals in the two parameter volume element case), we can write this as

When written out in this differential form, each of the respective area elements is an oriented area along one of the faces of the parameterization volume, much like the line integral that results from a two parameter volume curl integral. This is visualized in fig. 1.8. In this figure, faces (1) and (3) are “top faces”, those with signs matching the tops of the evaluation ranges eq. 1.0.94, whereas face (2) is a bottom face with a sign that is correspondingly reversed.

Example: Minkowski hyperbolic-spherical polar parameterization of 3D subspace

Working with a three parameter volume element in a Minkowski space does not change much. For example in a 4D space with , we can employ a hyperbolic-spherical parameterization similar to that used above for the 4D Euclidean space

This has tangent space basis elements

This is a normal basis, but again not orthonormal. Specifically, for we have

where we see that the radial vector is timelike. We can form the dual basis again by inspection

The area elements are

or

The volume element also reduces nicely, and is

The area and volume element reductions were once again messy, and were done in software using the notebook sphericalSurfaceAndVolumeElementsMinkowski.nb. However, we really only need eq. 1.0.96 to perform the Stokes integration.

Stokes theorem, four variable volume element parameterization

Volume elements for up to four parameters are likely of physical interest, with the four volume elements of interest for relativistic physics in spaces. For example, we may wish to use a parameterization

, with a four volume

We follow the same procedure to calculate the corresponding boundary surface “area” element (with dimensions of volume in this case). This is

Our boundary value surface element is therefore

where it is implied that this (and the dot products with ) are evaluated on the boundaries of the integration ranges of the omitted index. This same boundary form can be used for vector, bivector and trivector variations of Stokes theorem.

Duality and its relation to the pseudoscalar.

Looking to eq. 1.0.181 of lemma 6, and scaling the wedge product by its absolute magnitude, we can express duality using that scaled bivector as a pseudoscalar for the plane that spans

. Let’s introduce a subscript notation for such scaled blades

This allows us to express the unit vector in the direction of as

Following the pattern of eq. 1.0.181, it is clear how to express the dual vectors for higher dimensional subspaces. For example

or for the unit vector in the direction of ,

Divergence theorem.

When the curl integral is a scalar result we are able to apply duality relationships to obtain the divergence theorem for the corresponding space. We will be able to show that a relationship of the following form holds

Here is a vector,

is normal to the boundary surface, and

is the area of this bounding surface element. We wish to quantify these more precisely, especially because the orientation of the normal vectors is metric dependent. Working a few specific examples will show the pattern nicely, but it is helpful to first consider some aspects of the general case.

First note that, for a scalar Stokes integral we are integrating the vector derivative curl of a grade $k - 1$ blade $F$ over a k-parameter volume element. Because the dimension of the space matches the number of parameters, the projection of the gradient onto the tangent space is exactly that gradient

Multiplication of $F$ by the pseudoscalar will always produce a vector. With the introduction of such a dual vector, as in

Stokes theorem takes the form

or

where we will see that the vector can roughly be characterized as a normal to the boundary surface. Using primes to indicate the scope of the action of the gradient, cyclic permutation within the scalar selection operator can be used to factor out the pseudoscalar

The second last step uses lemma 8, and the last writes , where we have assumed (without loss of generality) that

has the same orientation as the pseudoscalar for the space. We also assume that the parameterization is non-degenerate over the integration volume (i.e. no

), so the sign of this product cannot change.

Let’s now return to the normal vector . With

(the

indexed differential omitted), and

, we have

We’ve seen in eq. 1.0.106 and lemma 7 that the dual of vector with respect to the unit pseudoscalar

in a subspace spanned by

is

or

This allows us to write

where , and

is the area of the boundary area element normal to

. Note that the

term will now cancel cleanly from both sides of the divergence equation, taking both the metric and the orientation specific dependencies with it.

This leaves us with

To spell out the details, we have to be very careful with the signs. However, that is a job best left for specific examples.

Example: 2D divergence theorem

Let’s start back at

On the left our integral can be rewritten as

where and we pick the pseudoscalar with the same orientation as the volume (area in this case) element

.

For the boundary form we have

The duality relations for the tangent space are

or

Back substitution into the line element gives

Writing (no sum) , we have

This provides us a divergence and normal relationship, with terms on each side that can be canceled. Restoring explicit range evaluation, that is

Let’s consider this graphically for a Euclidean metric as illustrated in fig. 1.9.

We see that

- along

the outwards normal is

,

- along

the outwards normal is

,

- along

the outwards normal is

, and

- along

the outwards normal is

.

Writing that outwards normal as , we have

Note that we can use the same algebraic notion of outward normal for non-Euclidean spaces, although we cannot expect the geometry to look anything like that of the figure.
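For the Euclidean case the 2D divergence theorem is easy to verify numerically. The sketch below is illustrative only and assumes a Cartesian parameterization of the unit square and a made-up vector field. The outward normals on the four edges are the positive or negative unit basis vectors, positive at the upper end of each parameter range and negative at the lower end, which is the sign alternation described above.

```python
import numpy as np

def field(x, y):
    """Sample vector field f = (x^2, x y)."""
    return x**2, x * y

def divergence(x, y):
    """d(x^2)/dx + d(x y)/dy = 2x + x; the 0*y term keeps the array shape."""
    return 3 * x + 0 * y

n = 1000
h = 1.0 / n
t = (np.arange(n) + 0.5) * h             # midpoints on [0, 1]
zeros, ones = np.zeros(n), np.ones(n)

X, Y = np.meshgrid(t, t)
area_integral = np.sum(divergence(X, Y)) * h * h

# Each edge: sample points (x, y) and the outward normal (nx, ny).
edges = [
    (ones, t, (1.0, 0.0)),               # x = 1, upper limit of x
    (zeros, t, (-1.0, 0.0)),             # x = 0, lower limit of x
    (t, ones, (0.0, 1.0)),               # y = 1, upper limit of y
    (t, zeros, (0.0, -1.0)),             # y = 0, lower limit of y
]
boundary_integral = 0.0
for x, y, (nx, ny) in edges:
    fx, fy = field(x, y)
    boundary_integral += np.sum(fx * nx + fy * ny) * h

print(area_integral, boundary_integral)
```

Both integrals evaluate to 3/2 for this field.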

Example: 3D divergence theorem

As with the 2D example, let’s start back with

In a 3D space, the pseudoscalar commutes with all grades, so we have

where , and we have used a pseudoscalar with the same orientation as the volume element

In the boundary integral our dual two form is

where , and

Observe that we can do a cyclic permutation of a 3 blade without any change of sign, for example

Because of this we can write the dual two form as we expressed the normals in lemma 7

We can now state the 3D divergence theorem, canceling out the metric and orientation dependent term on both sides

where (sums implied)

and

The outwards normals at the upper integration ranges of a three parameter surface are depicted in fig. 1.10.

This sign alternation originates with the two form elements from the Stokes boundary integral, which were explicitly evaluated at the endpoints of the integral. That is, for

,

In the context of the divergence theorem, this means that we are implicitly requiring the dot products to be evaluated specifically at the end points of the integration where

, accounting for the alternation of sign required to describe the normals as uniformly outwards.

Example: 4D divergence theorem

Applying Stokes theorem to a trivector in the 4D case we find

Here the pseudoscalar has been picked to have the same orientation as the hypervolume element . Writing

the dual of the three form is

Here, we’ve written

Observe that the dual representation nicely removes the alternation of sign that we had in the Stokes theorem boundary integral, since each alternation of the wedged vectors in the pseudoscalar changes the sign once.

As before, we define the outwards normals as on the upper and lower integration ranges respectively. The scalar area elements on these faces can be written in a dual form

so that the 4D divergence theorem looks just like the 2D and 3D cases

Here we define the volume scaled normal as

As before, we have made use of the implicit fact that the three form (and its dot product with ) was evaluated on the boundaries of the integration region, with a toggling of sign on the lower limit of that evaluation that is now reflected in what we have defined as the outwards normal.

We also obtain explicit instructions from this formalism how to compute the “outwards” normal for this surface in a 4D space (unit scaling of the dual basis elements), something that we cannot compute using any sort of geometrical intuition. For free we’ve obtained a result that applies to both Euclidean and Minkowski (or other non-Euclidean) spaces.

Volume integral coordinate representations

It may be useful to formulate the curl integrals in tensor form. For vectors , and bivectors

, the coordinate representations of those differential forms (see the problem “Expand volume elements in coordinates” in the appendix) are

Here the bivector and trivector

is expressed in terms of their curvilinear components on the tangent space

where

For the trivector components are also antisymmetric, changing sign with any interchange of indices.

Note that eq. 1.0.144d and eq. 1.0.144f appear much different on the surface, but both have the same structure. This can be seen by writing the former as

In both of these we have an alternation of sign, where the tensor index skips one of the volume element indices in sequence. We’ve seen in the 4D divergence theorem that this alternation of sign can be related to a duality transformation.

In integral form (no sum over indexes in

terms), these are

Of these, I suspect that only eq. 1.0.148a and eq. 1.0.148d are of use.

Final remarks

Because we have used curvilinear coordinates from the get go, we have arrived naturally at a formulation that works for both Euclidean and non-Euclidean geometries, and have demonstrated that Stokes theorem (and the divergence theorem) hold regardless of the geometry or the parameterization. We also know explicitly how to formulate both theorems for any parameterization that we choose, something much more valuable than knowledge that this is possible.

For the divergence theorem we have introduced the concept of outwards normal (for example in 3D, eq. 1.0.136), which still holds for non-Euclidean geometries. We may not be able to form intuitive geometrical interpretations for these normals, but do have an algebraic description of them.

Appendix

Problems

Question: Expand volume elements in coordinates

Show that the coordinate representation for the volume element dotted with the curl can be represented as a sum of antisymmetric terms. That is

- (a) Prove eq. 1.0.144a

- (b) Prove eq. 1.0.144b

- (c) Prove eq. 1.0.144c

- (d) Prove eq. 1.0.144d

- (e) Prove eq. 1.0.144e

- (f) Prove eq. 1.0.144f

Answer

(a) Two parameter volume, curl of vector

(b) Three parameter volume, curl of vector

(c) Four parameter volume, curl of vector

(d) Three parameter volume, curl of bivector

(e) Four parameter volume, curl of bivector

To start, we require lemma 3. For convenience let’s also write our wedge products as a single indexed quantity, as in for

. The expansion is

This last step uses an intermediate result from the eq. 1.0.152 expansion above, since each of the four terms has the same structure we have previously observed.

(f) Four parameter volume, curl of trivector

Using the shorthand again, the initial expansion gives

Applying lemma 4 to expand the inner products within the braces we have

We can cancel those last terms using lemma 5. Using the same reverse chain rule expansion once more we have

or

The final result follows after permuting the indices slightly.

Some helpful identities

Lemma 1. Distribution of inner products

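Given two blades $A_s$, $B_r$ with grades subject to $s > r > 0$, and a vector $b$, the inner product distributes according to

$$A_s \cdot (b \wedge B_r) = (A_s \cdot b) \cdot B_r.$$

This will allow us, for example, to expand a general inner product of the form $d^k \mathbf{x} \cdot (\boldsymbol{\partial} \wedge F)$.

The proof is straightforward, but also mechanical. Start by expanding the wedge and dot products within a grade selection operator

$$A_s \cdot (b \wedge B_r) = \langle A_s (b \wedge B_r) \rangle_{s - r - 1}.$$

Solving for $b \wedge B_r$ in

$$b B_r = b \cdot B_r + b \wedge B_r,$$

we have

$$A_s \cdot (b \wedge B_r) = \langle A_s b B_r - A_s (b \cdot B_r) \rangle_{s - r - 1}.$$

The last term above is zero since we are selecting the $s - r - 1$ grade element of a multivector with grades ranging from $s - r + 1$ to $s + r - 1$, which has no terms for $r > 0$. Now we can expand the $A_s b$ multivector product, for

$$A_s \cdot (b \wedge B_r) = \langle (A_s \cdot b + A_s \wedge b) B_r \rangle_{s - r - 1}.$$

The latter multivector (with the wedge product factor) above has grades ranging from $s + 1 - r$ to $s + 1 + r$, so this selection operator finds nothing. This leaves

$$A_s \cdot (b \wedge B_r) = \langle (A_s \cdot b) \cdot B_r + \cdots + (A_s \cdot b) \wedge B_r \rangle_{s - r - 1}.$$

The first dot products term has grade $s - r - 1$ and is selected, whereas the wedge term has grade $s + r - 1$, and any intermediate terms have grades strictly between these, so none of them equals $s - r - 1$ (for $r > 0$).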

Lemma 2. Distribution of two bivectors

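For vectors $a$, $b$, and bivector $B$, we have

$$(a \wedge b) \cdot B = \frac{1}{2} \left( a \cdot (b \cdot B) - b \cdot (a \cdot B) \right).$$

Proof follows by applying the scalar selection operator, expanding the wedge product within it, and eliminating any of the terms that cannot contribute grade zero values

$$(a \wedge b) \cdot B = \frac{1}{2} \langle (a b - b a) B \rangle = \frac{1}{2} \langle a (b \cdot B + b \wedge B) - b (a \cdot B + a \wedge B) \rangle = \frac{1}{2} \left( a \cdot (b \cdot B) - b \cdot (a \cdot B) \right).$$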
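A quick numerical sanity check of this lemma can be sketched as follows. This is only a sketch under stated assumptions: it takes the identity to have the form $(a \wedge b) \cdot B = \frac{1}{2}(a \cdot (b \cdot B) - b \cdot (a \cdot B))$, picks $B = u \wedge v$, and restricts to 3D Euclidean space so that the left hand side can be computed independently as $-(a \times b) \cdot (u \times v)$. All the helper names are hypothetical.

```python
def dot(p, q):
    """Euclidean dot product of two equal-length tuples."""
    return sum(pi * qi for pi, qi in zip(p, q))


def cross(p, q):
    """3D cross product."""
    return (
        p[1] * q[2] - p[2] * q[1],
        p[2] * q[0] - p[0] * q[2],
        p[0] * q[1] - p[1] * q[0],
    )


def vec_dot_bivector(b, u, v):
    """b . (u ^ v) = (b . u) v - (b . v) u, which is a vector."""
    bu, bv = dot(b, u), dot(b, v)
    return tuple(bu * vi - bv * ui for ui, vi in zip(u, v))


def lhs(a, b, u, v):
    """(a ^ b) . (u ^ v), valid in 3D Euclidean space only."""
    return -dot(cross(a, b), cross(u, v))


def rhs(a, b, u, v):
    """(1/2) (a . (b . B) - b . (a . B)) with B = u ^ v."""
    return 0.5 * (
        dot(a, vec_dot_bivector(b, u, v)) - dot(b, vec_dot_bivector(a, u, v))
    )


# Arbitrary sample vectors (assumed values, chosen only for illustration).
a, b, u, v = (1, 2, 3), (-1, 0, 4), (2, 5, -1), (0, 3, 7)
print(lhs(a, b, u, v), rhs(a, b, u, v))
```

Both sides agree for these sample vectors, which is consistent with the lemma but of course does not prove it.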

Lemma 3. Inner product of trivector with bivector

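Given a bivector $B$, and trivector $a \wedge b \wedge c$ where $a, b$ and $c$ are vectors, the inner product is

$$(a \wedge b \wedge c) \cdot B = a \left( (b \wedge c) \cdot B \right) + b \left( (c \wedge a) \cdot B \right) + c \left( (a \wedge b) \cdot B \right).$$

This is also problem 1.1(c) from Exercises 2.1 in [3], and submits to a dumb expansion in successive dot products with a final regrouping. With $B = u \wedge v$

$$\begin{aligned}
(a \wedge b \wedge c) \cdot B
&= \left( (a \wedge b \wedge c) \cdot u \right) \cdot v \\
&= \left( (a \wedge b)(c \cdot u) + (c \wedge a)(b \cdot u) + (b \wedge c)(a \cdot u) \right) \cdot v \\
&= (c \cdot u)\left( a (b \cdot v) - b (a \cdot v) \right) + (b \cdot u)\left( c (a \cdot v) - a (c \cdot v) \right) + (a \cdot u)\left( b (c \cdot v) - c (b \cdot v) \right) \\
&= a \left( (c \cdot u)(b \cdot v) - (b \cdot u)(c \cdot v) \right) + b \left( (a \cdot u)(c \cdot v) - (c \cdot u)(a \cdot v) \right) + c \left( (b \cdot u)(a \cdot v) - (a \cdot u)(b \cdot v) \right) \\
&= a \left( (b \wedge c) \cdot B \right) + b \left( (c \wedge a) \cdot B \right) + c \left( (a \wedge b) \cdot B \right).
\end{aligned}$$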

Lemma 4. Distribution of two trivectors

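Given a trivector $T$ and three vectors $a, b$, and $c$, the entire inner product can be expanded in terms of any successive set of inner products, subject to change of sign with interchange of any two adjacent vectors within the dot product sequence

$$\begin{aligned}
(a \wedge b \wedge c) \cdot T
&= a \cdot (b \cdot (c \cdot T)) = -a \cdot (c \cdot (b \cdot T)) \\
&= b \cdot (c \cdot (a \cdot T)) = -b \cdot (a \cdot (c \cdot T)) \\
&= c \cdot (a \cdot (b \cdot T)) = -c \cdot (b \cdot (a \cdot T)).
\end{aligned}$$

To show this, we first expand within a scalar selection operator

$$(a \wedge b \wedge c) \cdot T = \langle (a \wedge b \wedge c) T \rangle = \frac{1}{6} \langle a b c T - a c b T + b c a T - b a c T + c a b T - c b a T \rangle. \tag{1.0.168}$$

Now consider any single term from the scalar selection, such as the first. This can be reordered using the vector dot product identity

$$\langle a b c T \rangle = \langle a (-c b + 2 b \cdot c) T \rangle = -\langle a c b T \rangle + 2 (b \cdot c) \langle a T \rangle.$$

The vector-trivector product in the latter grade selection operation above contributes only bivector and quadvector terms, thus contributing nothing. This can be repeated, showing that

$$\langle a b c T \rangle = -\langle a c b T \rangle = \langle b c a T \rangle = -\langle b a c T \rangle = \langle c a b T \rangle = -\langle c b a T \rangle.$$

Substituting this back into eq. 1.0.168 proves lemma 4.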

Lemma 5. Permutation of two successive dot products with trivector

Given a trivector $T$ and two vectors $a$ and $b$, alternating the order of the dot products changes the sign

$$a \cdot (b \cdot T) = -b \cdot (a \cdot T).$$

This and lemma 4 are clearly examples of a more general identity, but I’ll not try to prove that here. To show this one, we have

Cancellation of terms above was because they could not contribute to a grade one selection. We also employed the relation $a \cdot B = -B \cdot a$ for bivector $B$ and vector $a$.

Lemma 6. Duality in a plane

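For a vector $a$, and a plane containing $a$ and $b$, the dual $a^*$ of this vector with respect to this plane is

$$a^* = \frac{b \cdot (a \wedge b)}{(a \wedge b)^2},$$

satisfying

$$a^* \cdot a = 1,$$

and

$$a^* \cdot b = 0.$$

To demonstrate, we start with the expansion of

$$b \cdot (a \wedge b) = (b \cdot a) b - b^2 a.$$

Dotting with $a$ we have

$$a \cdot \left( b \cdot (a \wedge b) \right) = (a \cdot b)^2 - a^2 b^2, \tag{1.0.177}$$

but dotting with $b$ yields zero

$$b \cdot \left( b \cdot (a \wedge b) \right) = (b \cdot a) b^2 - b^2 (a \cdot b) = 0.$$

To complete the proof, we note that the product in eq. 1.0.177 is just the wedge squared

$$(a \wedge b)^2 = (a \wedge b) \cdot (a \wedge b) = \left( (a \wedge b) \cdot a \right) \cdot b = \left( a (b \cdot a) - b a^2 \right) \cdot b = (a \cdot b)^2 - a^2 b^2.$$

This duality relation can be recast with a linear denominator. Since $b \wedge (a \wedge b) = 0$, the dot product $b \cdot (a \wedge b)$ equals the full product $b (a \wedge b)$, so

$$a^* = \frac{b \cdot (a \wedge b)}{(a \wedge b)^2} = b \frac{a \wedge b}{(a \wedge b)^2},$$

or

$$a^* = b \frac{1}{a \wedge b}.$$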

We can use this form after scaling it appropriately to express duality in terms of the pseudoscalar.
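As a concrete check of the plane duality relation, here is a minimal sketch. It assumes a Euclidean metric and the expanded form $a^* = ((a \cdot b) b - b^2 a)/((a \cdot b)^2 - a^2 b^2)$, which needs only dot products and so works for vectors embedded in any dimension. The function name `dual_in_plane` and the sample vectors are hypothetical.

```python
from fractions import Fraction


def dot(p, q):
    """Euclidean dot product of two equal-length tuples."""
    return sum(pi * qi for pi, qi in zip(p, q))


def dual_in_plane(a, b):
    """Dual of a with respect to the plane spanned by a and b.

    Uses b . (a ^ b) = (a . b) b - b^2 a and (a ^ b)^2 = (a . b)^2 - a^2 b^2.
    Integer components are assumed so that Fraction gives exact results.
    """
    ab, aa, bb = dot(a, b), dot(a, a), dot(b, b)
    wedge_sq = ab * ab - aa * bb
    if wedge_sq == 0:
        raise ValueError("a and b are colinear, so they do not span a plane")
    return tuple(Fraction(ab * bi - bb * ai, wedge_sq) for ai, bi in zip(a, b))


# Two vectors in a 4D Euclidean space (assumed sample values).
a = (1, 2, 0, 1)
b = (3, -1, 2, 0)
a_star = dual_in_plane(a, b)

print(a_star)
print(dot(a_star, a), dot(a_star, b))
```

The two printed dot products should be one and zero respectively, matching the two defining conditions of the lemma.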

Lemma 7. Dual vector in a three vector subspace

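In the subspace spanned by $\{a, b, c\}$, the dual of $a$ is

$$a^* = (b \wedge c) \frac{1}{a \wedge b \wedge c}.$$

Consider the dot product of $a^*$ with $u \in \{a, b, c\}$.

$$u \cdot a^* = \left\langle u (b \wedge c) \frac{1}{a \wedge b \wedge c} \right\rangle = \left\langle \left( u \cdot (b \wedge c) \right) \frac{1}{a \wedge b \wedge c} \right\rangle + \left\langle (u \wedge b \wedge c) \frac{1}{a \wedge b \wedge c} \right\rangle.$$

The first term on the right is eliminated since it is the product of a vector and trivector producing no scalar term. Substituting $a, b, c$ for $u$, and noting that $u \wedge u = 0$, we have

$$a \cdot a^* = 1, \qquad b \cdot a^* = 0, \qquad c \cdot a^* = 0.$$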
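For the special case of 3D Euclidean space, this dual can be checked numerically. The sketch below assumes the dual has the form $a^* = (b \wedge c)(a \wedge b \wedge c)^{-1}$, which in 3D Euclidean space reduces to the familiar reciprocal vector $(b \times c)/(a \cdot (b \times c))$ once both blades are written in terms of the unit pseudoscalar. The function name `dual_in_3d` and the sample vectors are hypothetical.

```python
from fractions import Fraction


def dot(p, q):
    """Euclidean dot product of two equal-length tuples."""
    return sum(pi * qi for pi, qi in zip(p, q))


def cross(p, q):
    """3D cross product."""
    return (
        p[1] * q[2] - p[2] * q[1],
        p[2] * q[0] - p[0] * q[2],
        p[0] * q[1] - p[1] * q[0],
    )


def dual_in_3d(a, b, c):
    """Dual of a in span{a, b, c}: (b x c) / (a . (b x c)).

    3D Euclidean special case only. Integer components are assumed so that
    Fraction gives exact results.
    """
    bc = cross(b, c)
    triple = dot(a, bc)
    if triple == 0:
        raise ValueError("a, b, c are linearly dependent")
    return tuple(Fraction(x, triple) for x in bc)


# Assumed sample vectors, chosen only for illustration.
a, b, c = (1, 0, 1), (0, 2, 1), (1, 1, 0)
a_star = dual_in_3d(a, b, c)

print(a_star)
print(dot(a_star, a), dot(a_star, b), dot(a_star, c))
```

The dot products with $a$, $b$, $c$ should come out as one, zero, zero.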

Lemma 8. Pseudoscalar selection

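For grade $k$ blade $K \in \bigwedge^k$ (i.e. a pseudoscalar), and vectors $a, b$, the grade $k$ selection of this blade sandwiched between the vectors is

$$\langle a K b \rangle_k = (-1)^{k+1} \langle K a b \rangle_k = (-1)^{k+1} K (a \cdot b).$$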

To show this, we have to consider even and odd grades separately. First for even $k$ we have

or

Similarly for odd $k$, we have

or

Adjusting for the signs completes the proof.

References

[1] John Denker. Magnetic field for a straight wire, 2014. URL http://www.av8n.com/physics/straight-wire.pdf. [Online; accessed 11-May-2014].

[2] H. Flanders. Differential Forms With Applications to the Physical Sciences. Courier Dover Publications, 1989.

[3] D. Hestenes. New Foundations for Classical Mechanics. Kluwer Academic Publishers, 1999.

[4] Peeter Joot. Collection of old notes on Stokes theorem in Geometric algebra, 2014. URL https://sites.google.com/site/peeterjoot3/math2014/bigCollectionOfPartiallyIncorrectStokesTheoremMusings.pdf.

[5] Peeter Joot. Synposis of old notes on Stokes theorem in Geometric algebra, 2014. URL https://sites.google.com/site/peeterjoot3/math2014/synopsisOfBigCollectionOfPartiallyIncorrectStokesTheoremMusings.pdf.

[6] A. Macdonald. Vector and Geometric Calculus. CreateSpace Independent Publishing Platform, 2012.

[7] M. Schwartz. Principles of Electrodynamics. Dover Publications, 1987.

[8] Michael Spivak. Calculus on manifolds, volume 1. Benjamin New York, 1965.